AI Public Dialogues

Project Overview

In September 2024, ai@cam convened public dialogue workshops in Liverpool and Cambridge to better understand public perspectives on the role of AI.

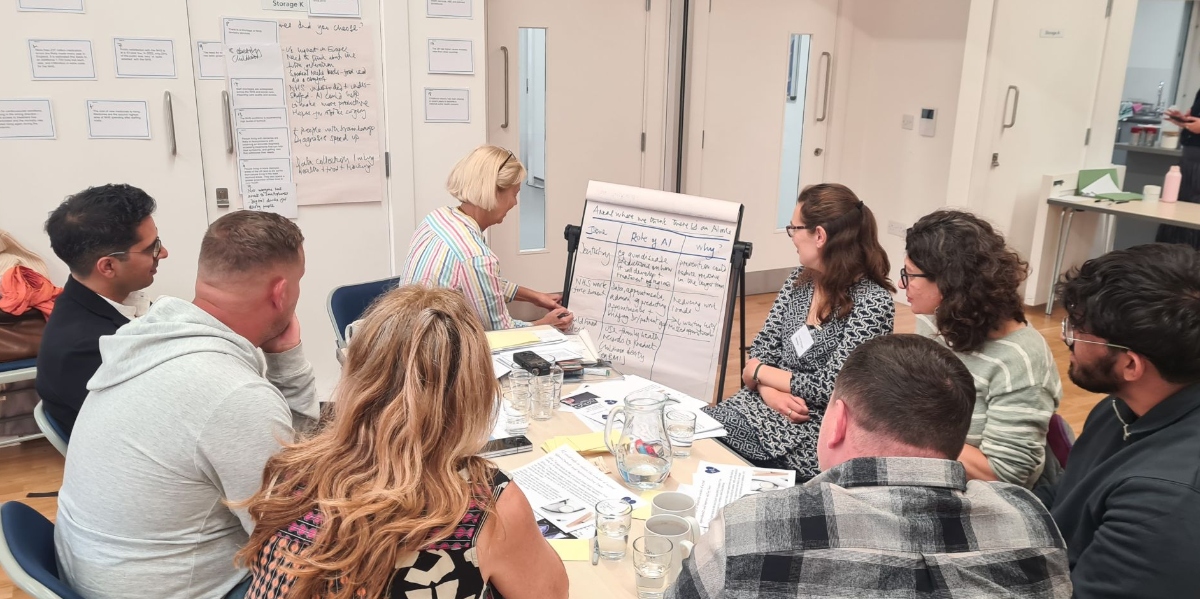

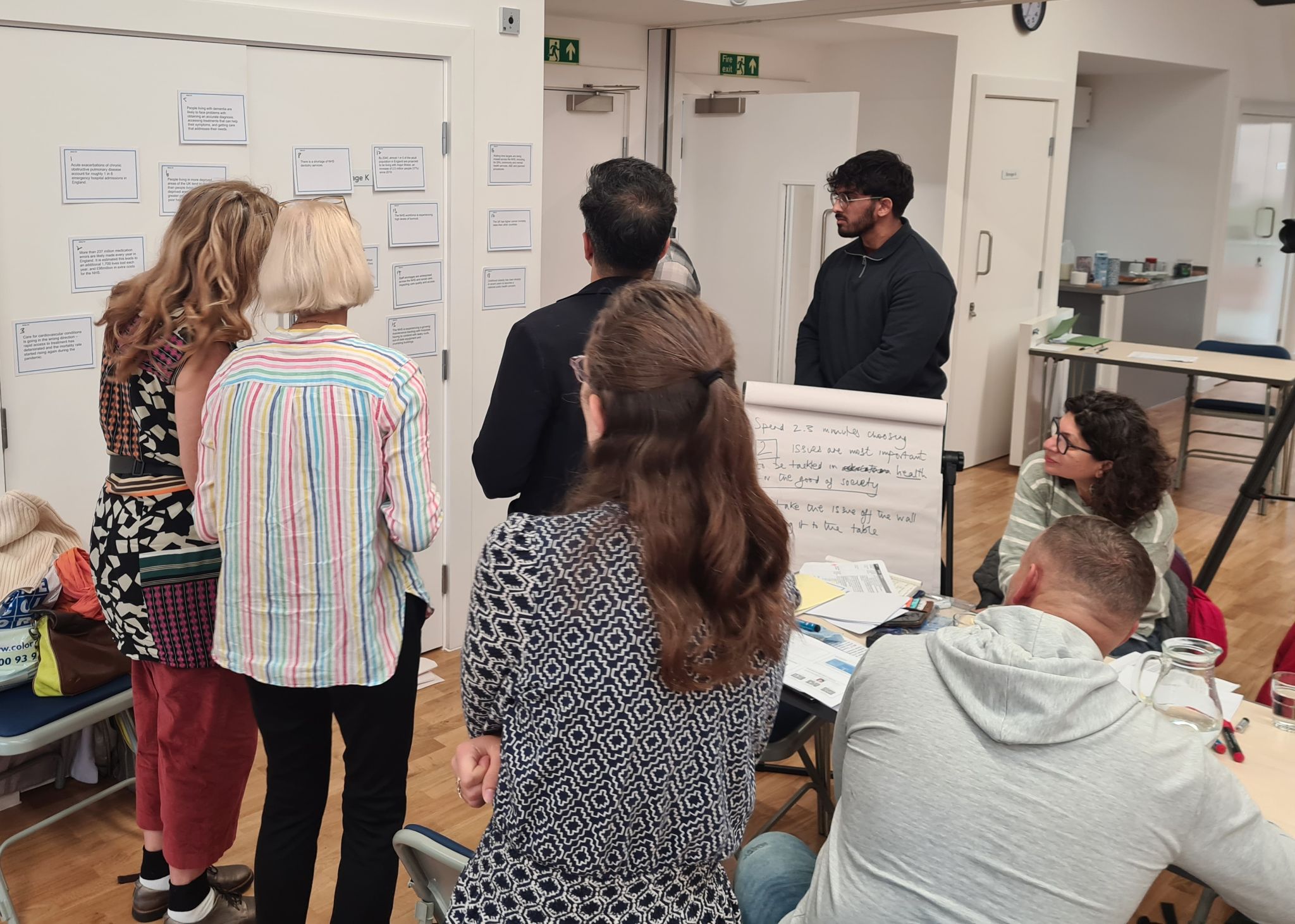

As part of a collaboration between ai@cam and the Kavli Centre for Ethics, Science, and the Public, 40 members of the public took part in two workshops in Liverpool and Cambridge to discuss their aspirations for AI in public services and the interventions needed to shape its development.

Designed and delivered by the specialist social research agency Hopkins Van Mil, these dialogues set out to understand public perspectives on the role of AI in delivering priority policy agendas connected to four of Labour’s Missions for Government: crime and policing, education, health, and energy and net zero.

Public participants were divided into smaller groups, each dedicated to one of the four government missions. Guided by a prepared list of discussion topics and supported by an AI expert from the University of Cambridge, University of Liverpool, King’s College London, or University of Manchester, each group considered the guardrails and possible interventions needed to ensure AI delivers public benefit.

Findings from those conversations have been published today in a new ai@cam report, which offers the first analysis of how the public think AI could help deliver the Missions for Government.

The report outlines the opportunities for AI to improve people’s interactions with public services and shows there are growing demands for governance that centres public benefit in AI development and use.

Participants in this dialogue called for:

- Further transparency around where and how AI is being used, supported by education and public information initiatives that help build understanding of AI.

- Governance that ensures public benefit is the guiding principle for the use of AI in all public services, backed up by powers to prevent profit being prioritised over service quality.

- Independent regulatory bodies that are equipped with the power to influence how and where AI is used, and that can take action to prevent misuse.

- Collaborative, user-centred design practices that put user needs at the heart of AI development and that actively work to remove bias and improve reliability.

The findings from our dialogues offer insights into a future vision for the use of AI in public services in the UK. They suggest a roadmap that can support policymakers in stewarding the development of AI technologies, and we hope this work informs a continuing conversation about how we can drive AI innovations that deliver widespread public benefit.

We would like to thank all our project collaborators, including those from the University of Cambridge, the University of Liverpool, the University of Manchester, King’s College London and Hopkins Van Mil, as well as our public participants for their time and insights.

Read the AI Public Dialogue Report
